What Is Text Classification? The Definitive 2025 Guide

Text classification is a powerful use case of machine learning. But what is it? How does it differ from other AI/ML terms like image classification, sentiment analysis, or natural language processing?

In this article we’ll answer these questions, alongside how it works, how you can easily create your own model, and what the top 5 text classification tools are.

Table of Contents

- What is text classification?

- Why would I use text classification?

- What are the types of classification?

- How do I launch my own text classifier?

- 5 best text classification solutions

What is text classification?

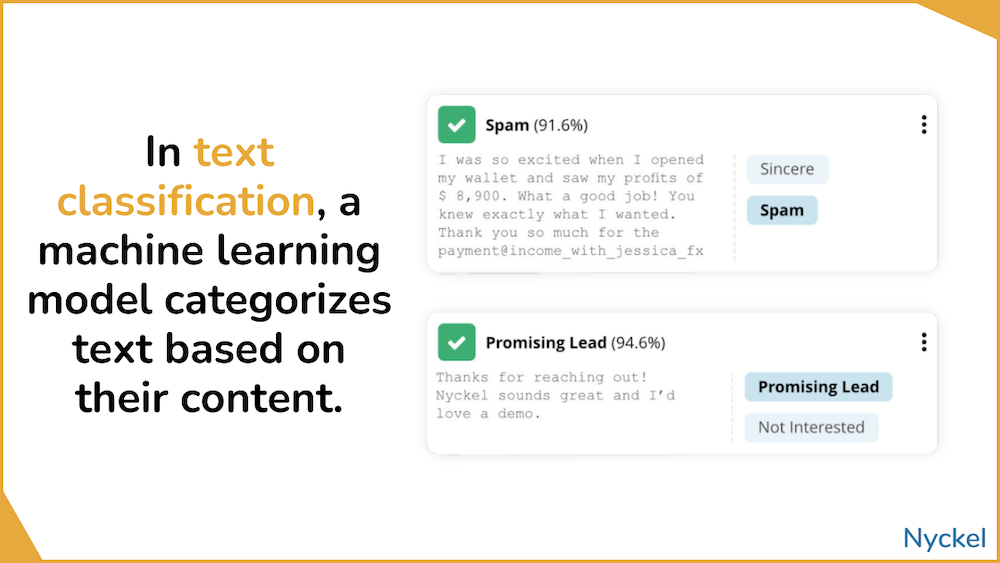

Text classification is the process of categorizing text into different groups (classes) based on its content. It involves machine learning algorithms — specifically deep learning models like Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs) — that can identify patterns within the text.

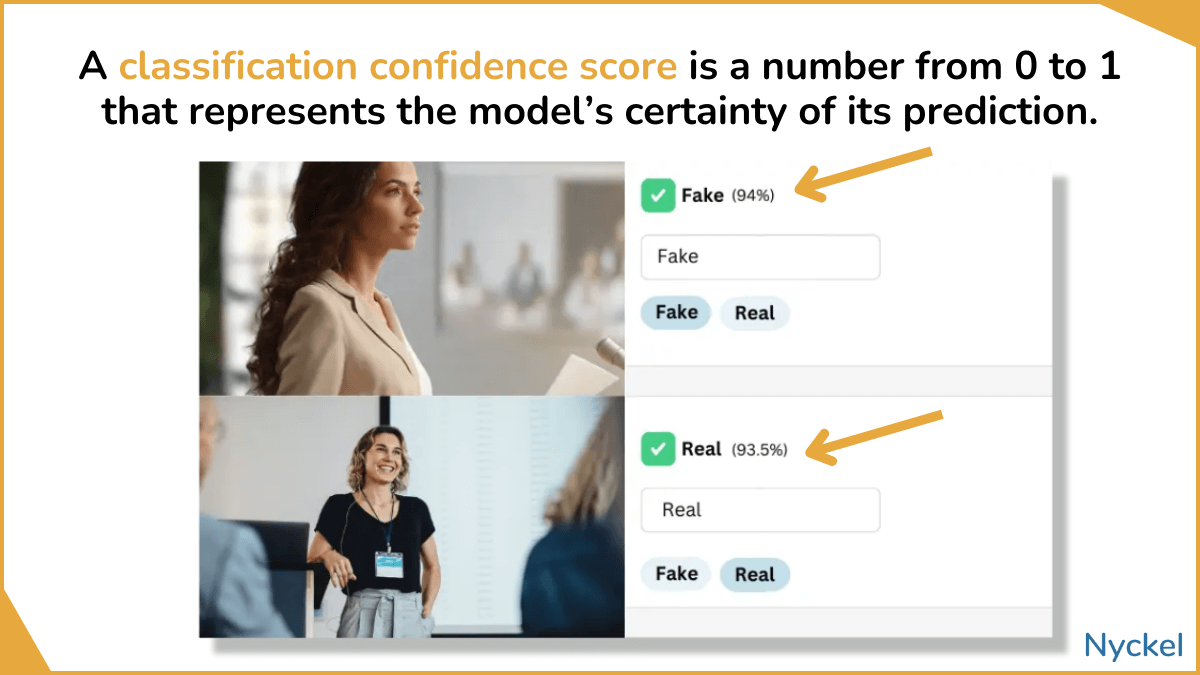

These models are generally built using prelabeled (annotated) training text. Once trained, you can feed the model new, unseen text snippets and have it automatically classify them. Along with the prediction, models will provide a confidence level that ranges from 0 to 1 and highlights how confident the model is that it’s right (the higher, the more confident).

Why would I use text classification?

Text classification allows you to quickly mine data to understand sentiment, intent, category, and so on.

Doing this manually would be time-consuming, if not impossible. For instance, combing through 10,000 emails, each with an average of 50 words or more, could take weeks, or even months.

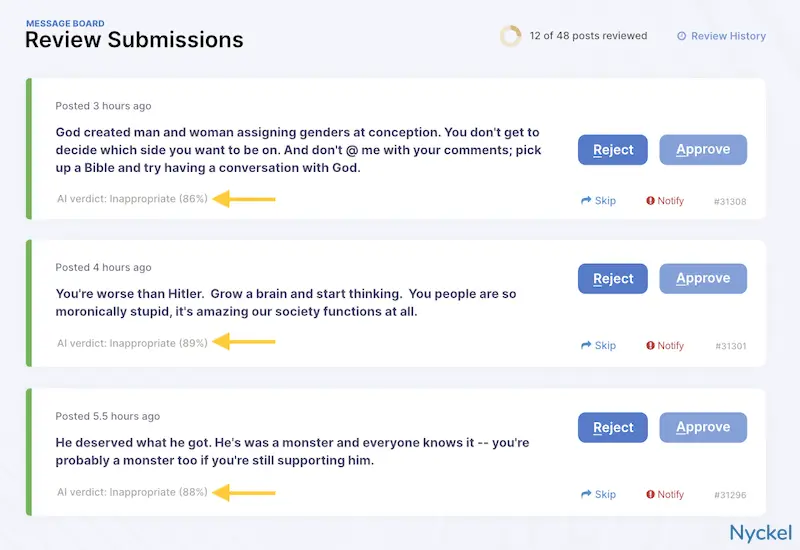

And for situations like content moderation - where you want to flag and remove offensive comments within minutes - automation is a necessity.

Let’s say you work at an online banking company. You want to analyze support tickets to understand your product’s biggest pain points (money transfers, deposits, etc.). You could manually review every ticket - or ask the support team / customers to label them themselves - but both paths are slow and error-prone. You could instead build a machine learning model that can ingest the tickets and automatically identify the most applicable category.

Text classification is used by many other industries too:

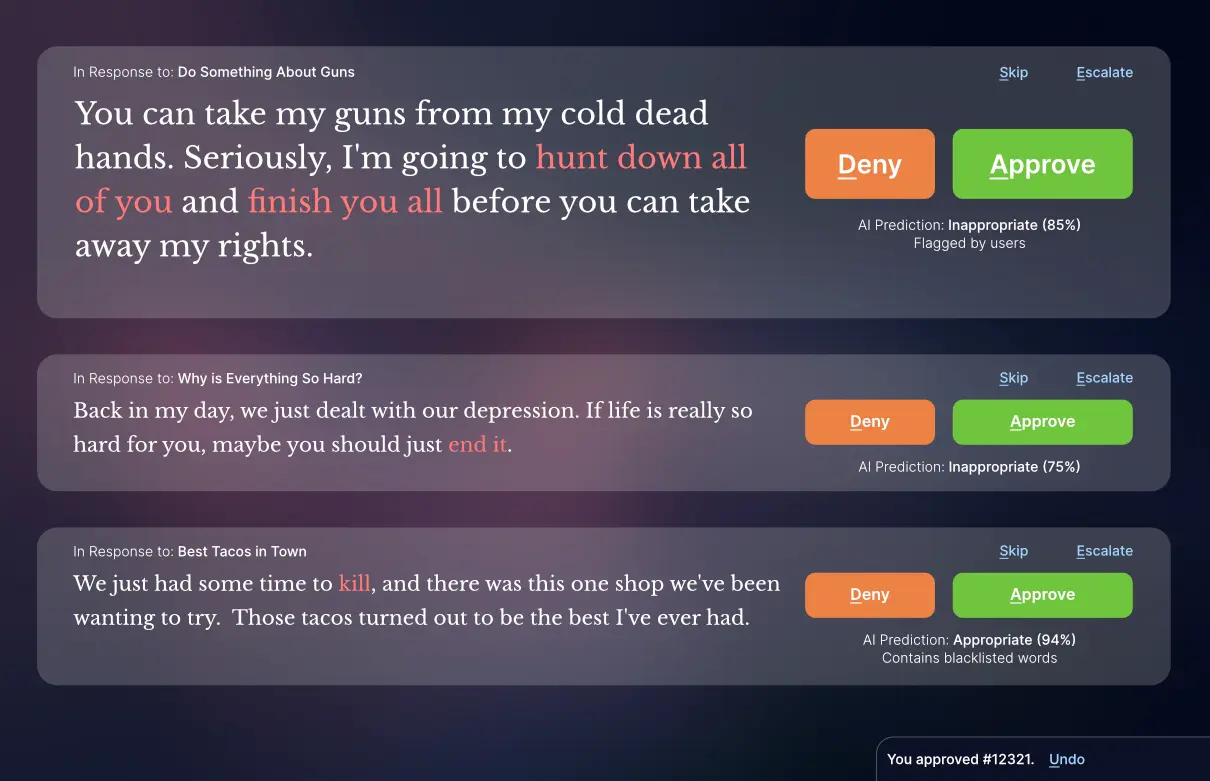

Content Moderation

Many brands, from online communities to dating services to social networks, use text classification to auto-flag any messages that violate their rules. Certainly offensive text comes to mind, but brands may also have custom rules, such as a pet marketplace flagging posts that insinuate illegal dog breeding practices.

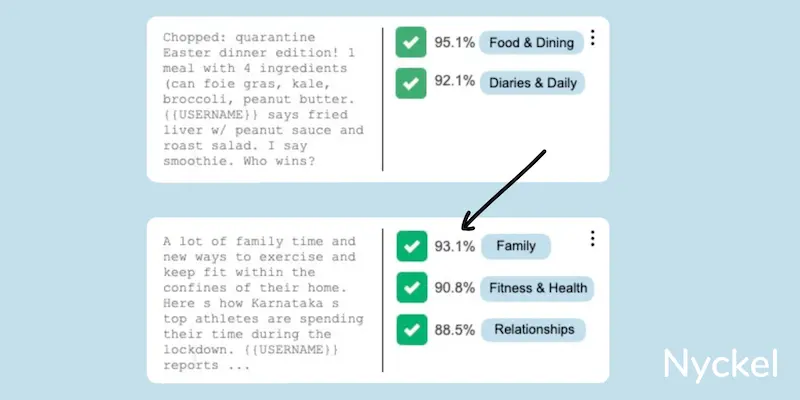

Category Tagging

Text classification is great at identifying contextual themes, like whether a website’s content is food-related, sports-related, etc. The advertising tech industry uses such models to determine how much to bid for an ad placement. DoorDash, for instance, will likely pay more to appear on food-related websites vs car-related ones.

Text classifiers help these companies automatically tag articles with the right content category, enabling them to earn more money from each impression.

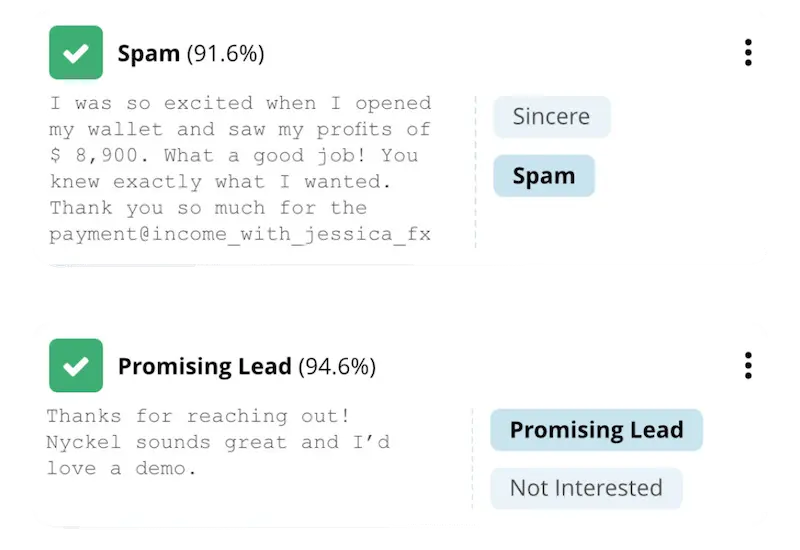

Spam Identification

Many services, especially social networks and directories, use text classification to find and remove spam or fraud.

Gust, for instance, is a directory that helps startups reach angel investors. But, like many directories, they are plagued by spam listings. To fight them, they use machine learning to analyze new profiles and determine, in real-time, what is real.

Text Sorting

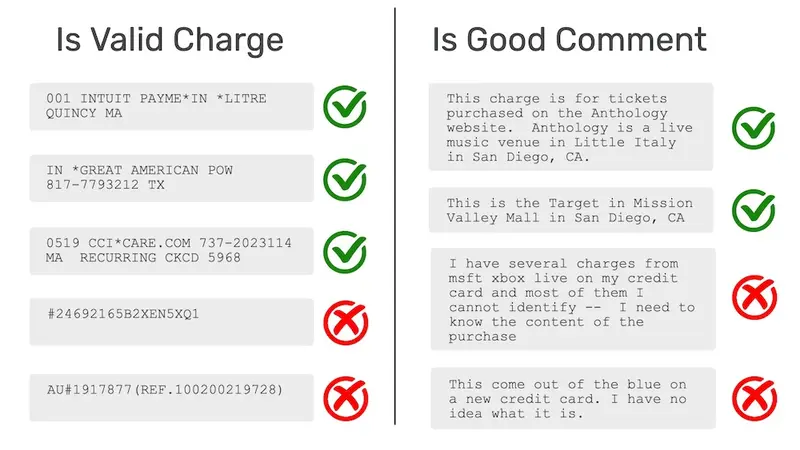

What’s That Charge identifies the sometimes mysterious charges on one’s credit card statement. Users upload their charges, and WTC tells them who it’s from. But users often upload text that aren’t charges at all. To address this, WTC uses text classification to determine if a given input is a charge or a meaningless string.

File Analysis

Text classification helps organizations analyze document repositories and identify content categories automatically. By processing large volumes of text data, these systems can categorize documents by department, purpose, or sensitivity level without manual review. This capability is particularly valuable for detecting Shadow IT, when employees use unauthorized software or services.

Brand Sentiment Analysis

Sentiment analysis is a common use case for text classification too. Here, models will categorize text as positive or negative (and/or use grandients, such as neutral, love, hate, etc.). Such tools help brands monitor what people are saying about them.

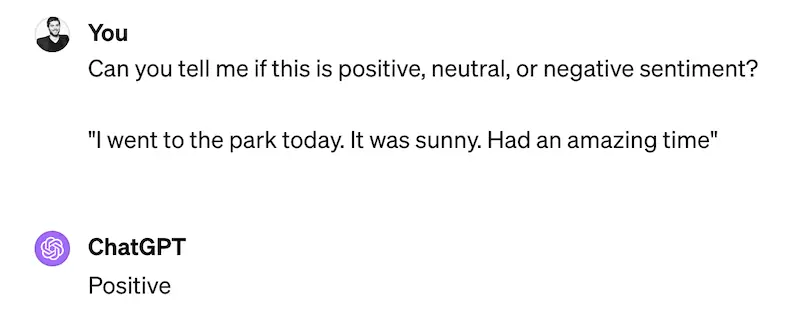

Want to test a sentiment analysis model? Input text into the below box, and it will classify it as positive, negative, or neutral.

How does text classification differ from other machine learning terms?

The machine learning space can certainly be confusing. Below defines other relevant concepts.

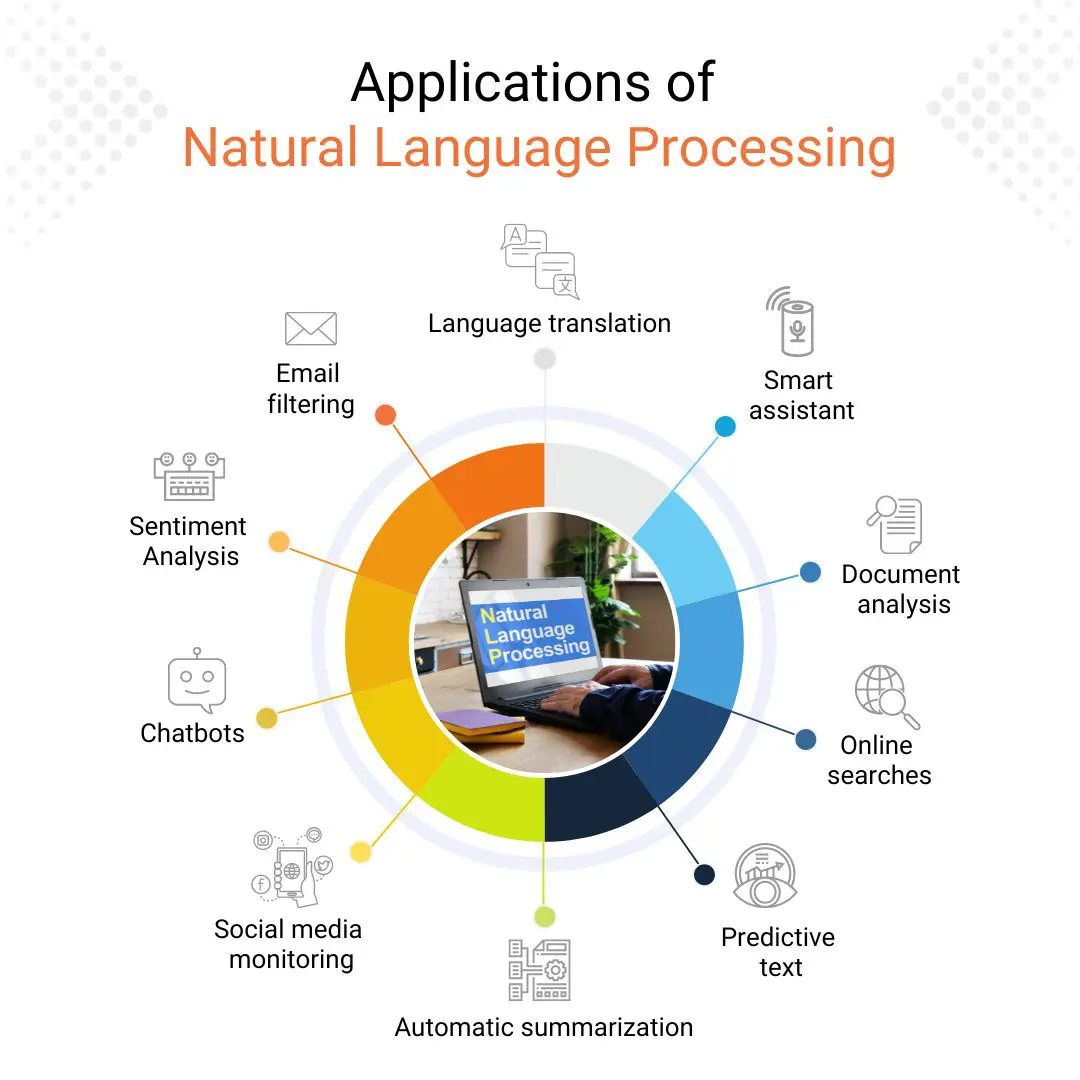

- Natural Language Processing - Natural Language Processing is a field that encompasses a range of tasks related to both understanding (analysis, classification) and generation (creation, synthesis). Text classification falls under this catch-all, but it also includes tasks like summarization, translation, question answering, text parsing, and more.

- Text mining - Text mining is a broad term for analyzing text. It can include text classification, as well as tasks like parsing data within the text, clustering by theme, and labeling individual words within the text (versus text classification, which classifies the text as a whole).

- Topic and document clustering - Typically, “text classification” refers to a supervised form of classification that involves predefined labels. In contrast, “topic clustering” or “document clustering” refers to an unsupervised approach, where the model groups the text by similarity, using rules it defines. It’s great for quickly finding themes across large textual datasets.

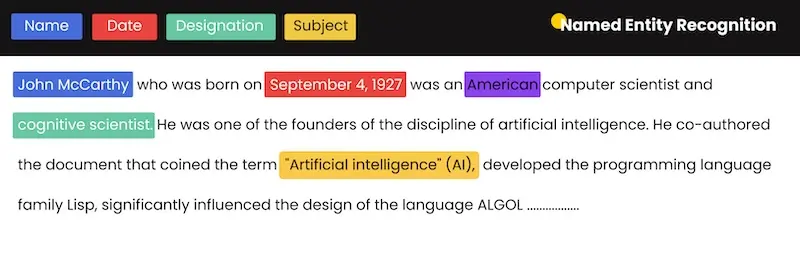

- Named Entity Recognition (NER) - Named Entity Recognition involves classifying specific words or phrases within the text.

- Information Retrieval (IR) - Information Retrieval is the process of pulling relevant info from a large repo using queries. It’s a form of search, not mining or classification.

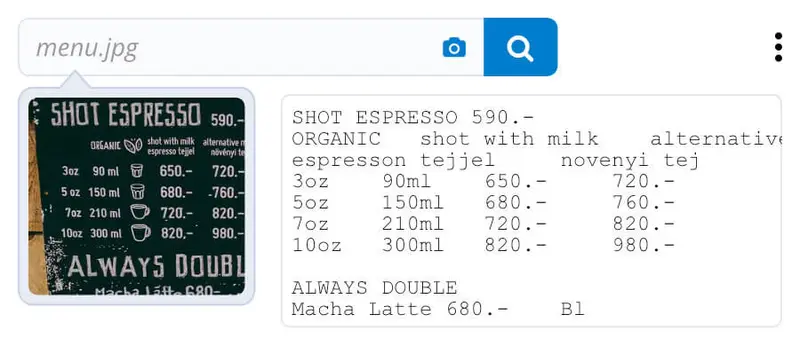

- OCR (Optical Character Recognition) - OCR extracts text and numbers from images. It straddles both computer vision and natural language processing.

How does text classification differ from image classification?

Image classification is like text classification but involves, not surprisingly, images. Image classification falls under Computer Vision, a field that enables computers to understand visual information.

With image classification, the model will analyze the image as a whole, and then assign it to a predetermined label. For instance, you could have a recycling identifier that ingests an image and tells you if it’s recyclable.

Use cases of image classification include:

- Image content moderation - Classifiers can analyze images for offending material, from NSFW content to SFW images that still violate their terms.

- Product tagging - Models automatically tag an image with the right clothing type, style, colors, etc.

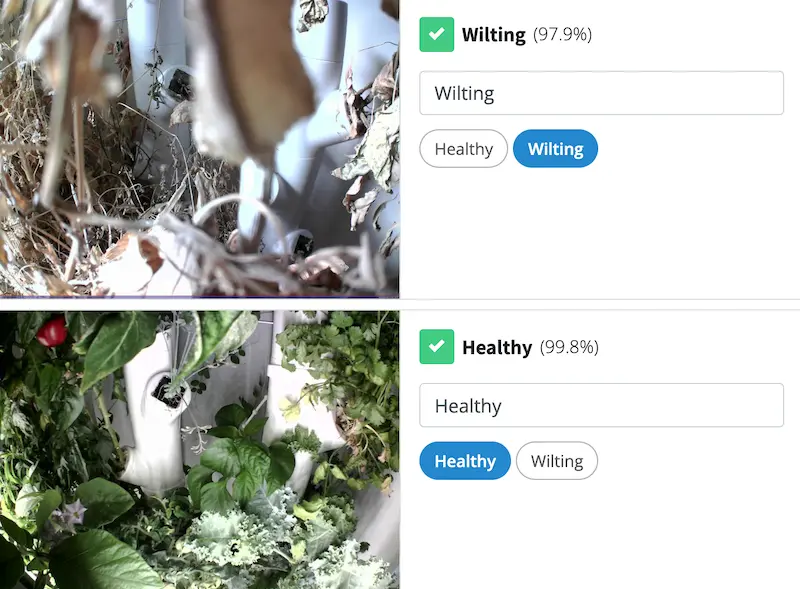

- IOT observation - Companies like Gardyn use image classification to analyze the images their IOT devices take.

Is text classification considered to be AI?

Yes, text classification is considered to be a task within the field of Artificial Intelligence (AI).

AI is a broad-reaching term and includes Natural Language Processing, machine learning, and, therefore, classification.

Is text classification what ChatGPT does?

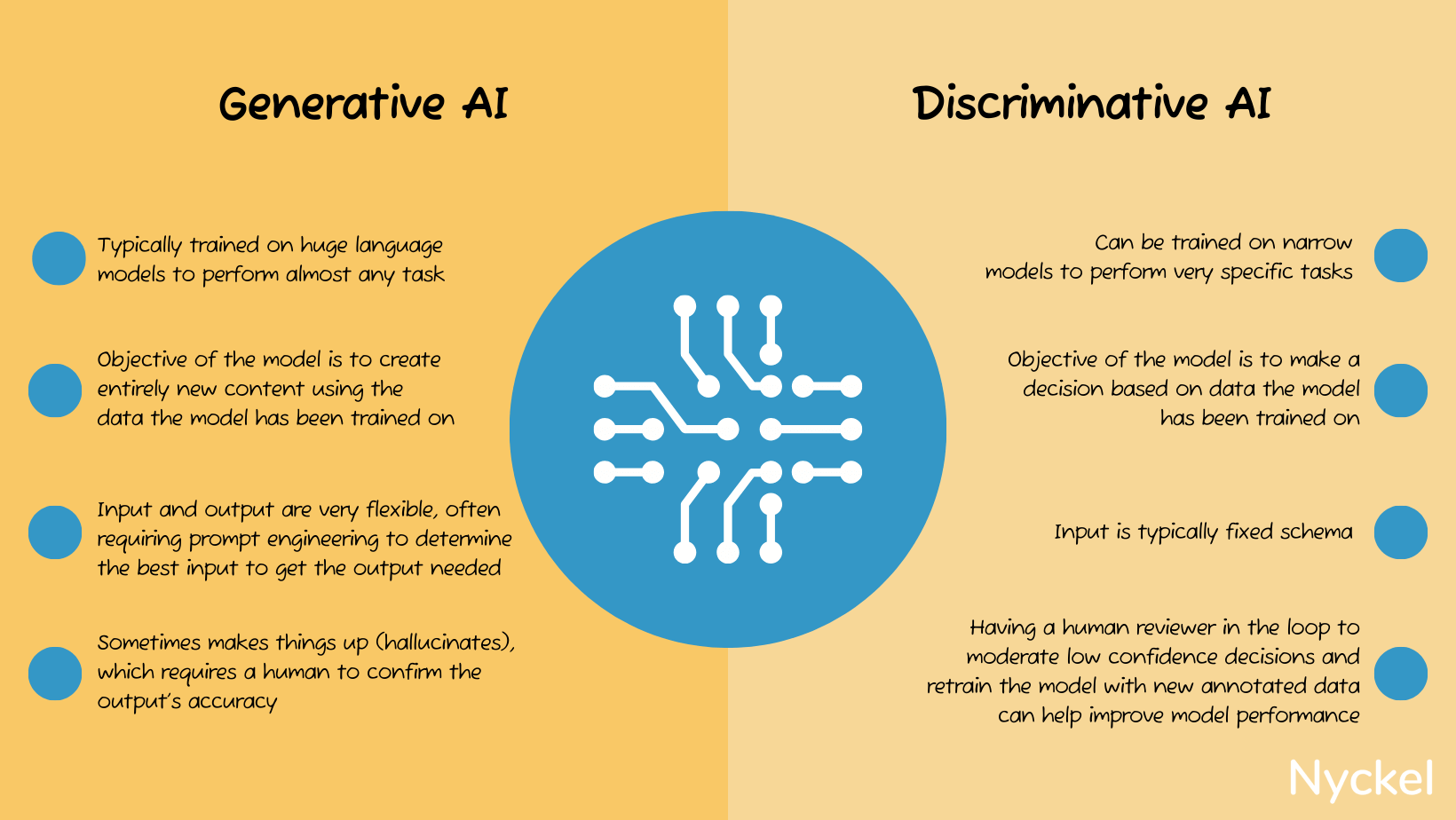

Yes and no. AI, at a high level, can be separated into two areas: discriminative and generative. Generative AI refers to AI that can create new content, while discriminative AI will identify elements within data. Text classification falls into the latter, while ChatGPT’s main use case is generative.

So when someone uses ChatGPT, they are likely using it for generative purposes, like asking it to write a poem about their cat.

That said, ChatGPT has built-in classification abilities, particularly for commoditized datasets like offensive words.

Where ChatGPT struggles is custom use cases. What may be banned on one site is allowed on another, but ChatGPT won’t intuitively understand these nuances. Fine-tuning ChatGPT for your use case can be cumbersome, if not impossible.

Do I need machine learning experience to build a classification model?

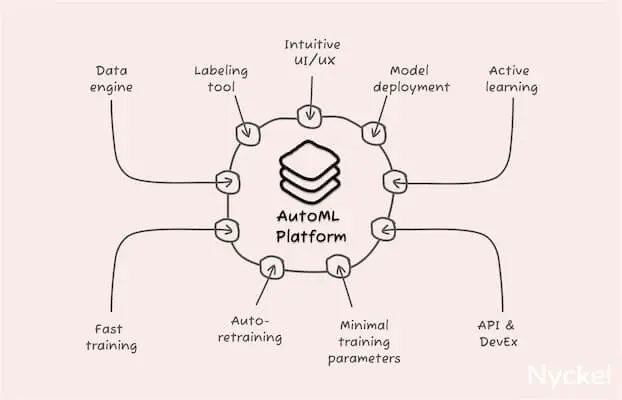

Nope, not any more. Even a few years ago, building an ML model was exclusive to experts. But now there are AutoML (automated machine learning) platforms tools that abstract the technical complexity. Some ML experience is helpful, but mainly around understanding best practices with annotation, sample preparation, and fine-tuning.

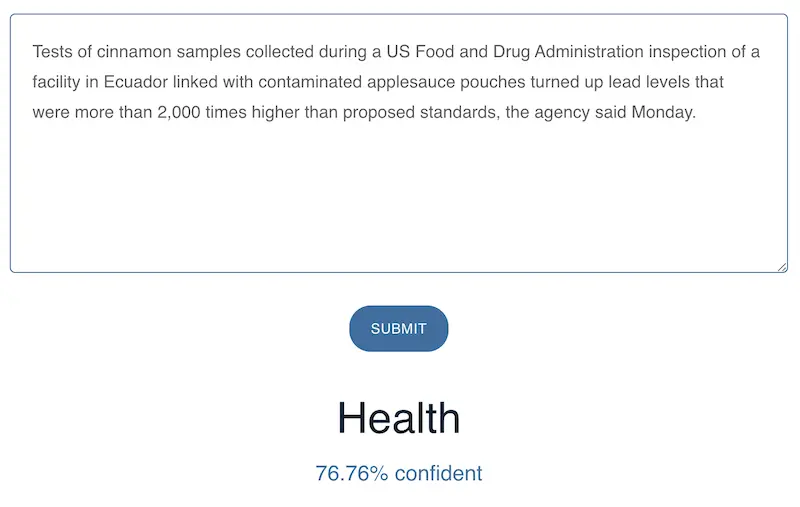

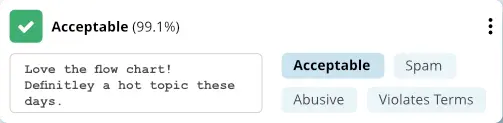

What is a classification confidence score?

Classification models are effectively making a guess off their training data. The confidence score they return is a rough approximation of how confident the model is that it guessed correctly.

Confidence levels range from 0 to 1, but they’re often presented as a percentage (0% to 100%). The closer to 1, the more confident it is in the label.

These scores are probabilistic and don’t mean the model is right or wrong. If the model is poorly trained, it might be highly confident in a wrong answer.

That said, if you’re confident in your model, the confidence score can be a useful tool. A social network, for instance, could use a hybrid moderation approach, where the model takes the first pass on user-generated text.

If it tags something as Pass/Fail with a high score of, say, 97%, then the tool would auto-allow or auto-block it. But everything else would go to manual review. If most text falls into this 97% bucket, they are saving themselves a ton of time.

Are there ethical considerations with classification?

As with any AI, classification runs the risk of misuse and biases.

Imagine a classifier that can identify sentiment toward country leaders. If that government could mine phone records / social networks / etc., it could go after anyone who has expressed discontent.

Of course, if you’re building a classifier to categorize, say, clickbait headlines, there’s little to worry about. Still, when building any type of AI, you should be cognizant of potential misuse risks.

What are the types of classification?

There are a few ways to break down classification. Let’s look at those:

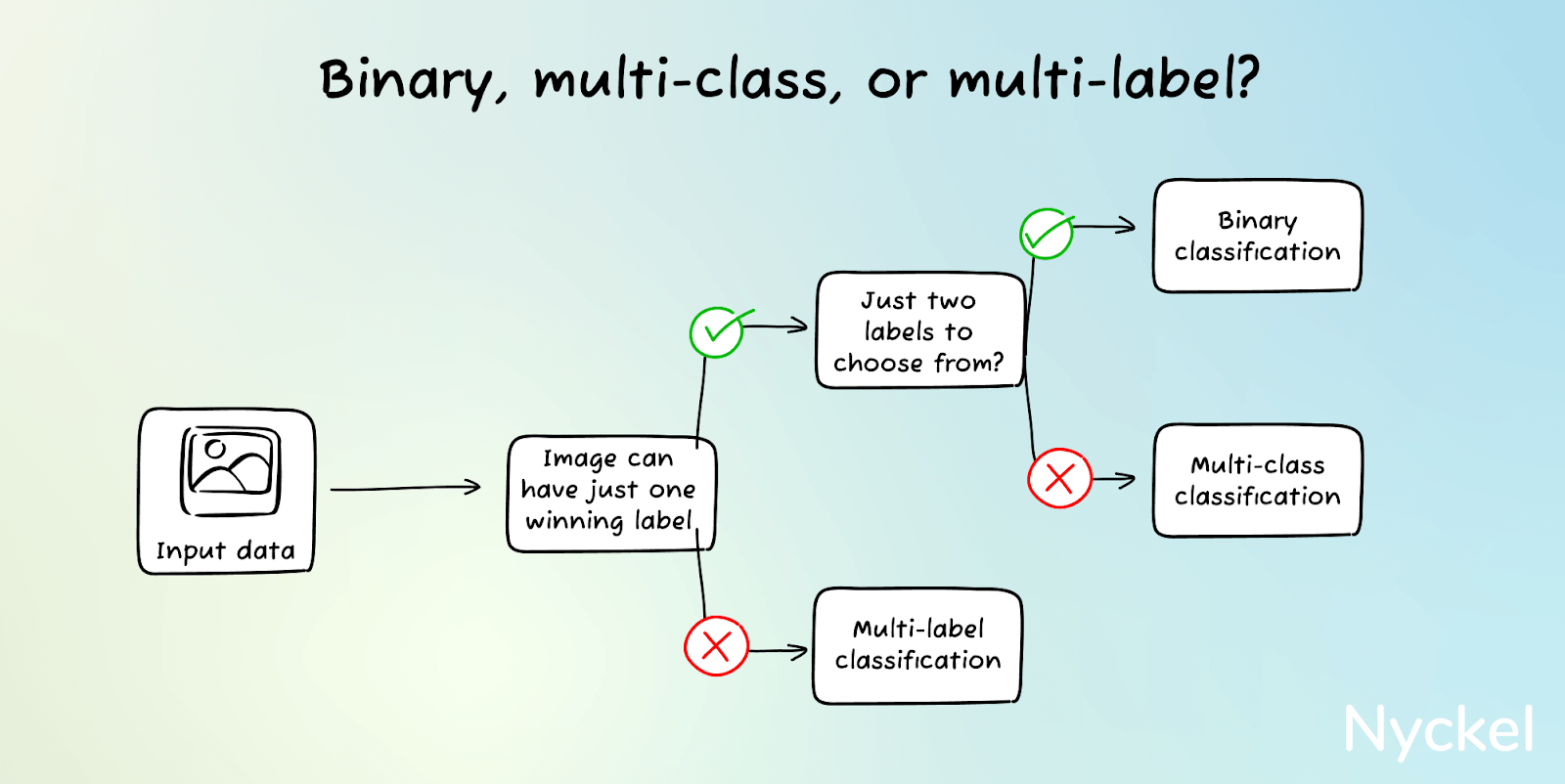

- Binary, multi-class, and multi-label - Denotes (1) the number of label options, and (2) how many classes the text can fall into.

- Hierarchical vs. flat - Refers to different ways to structure the classification algorithm.

- Supervised vs. unsupervised - Describes two approaches around label selection and annotation.

What is binary, multi-class, and multi-label text classification?

These terms highlight (1) the # of classes the model can choose from and (2) whether the text can be tagged with one or multiple classes (exclusive versus non-exclusive).

- Binary classification - Binary classification involves picking one winner out of two classes, such as Offensive / Not Offensive, or Support Issue / Not Support Issue. It can be as simple as “Is this a recipe?” to a more complex task like deciding whether an article is fake.

- Multi-class classification - Multi-class classification also involves just one winner, but instead of two possible classes, there are three or more.

An example includes a classifier that maps job title to job department. The title of “Head of Sales” falls under just one department (“Sales”).

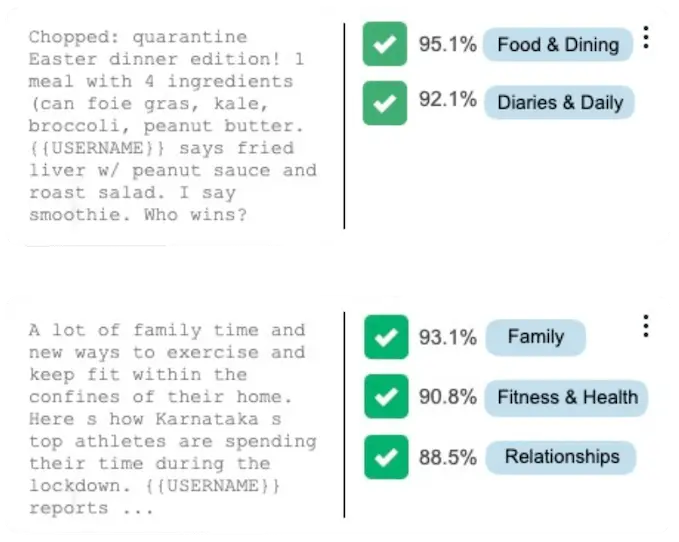

- Multi-label classification - With binary and multi-class, the class is exclusive, meaning the model will assign the input to just one class. With multi-label classification, the model doesn’t have that limitation. It can tag the text with multiple labels if needed.

For instance, you could have a classifier that tags SMS messages with topics. A short message like, “Today I drove my new Toyota to McDonalds” could be tagged as car-related and food-related.

| Binary | Multi-Class | Multi-Label | |

|---|---|---|---|

| Use when | The text can fall under just 1 of 2 predefined categories | The text can fall under just 1 of 3+ predefined categories | The text can have multiple non-exclusive tags |

| Use cases | Identifying spam (yes/no) | Identifying language (English, Spanish, etc.) | Tagging topics |

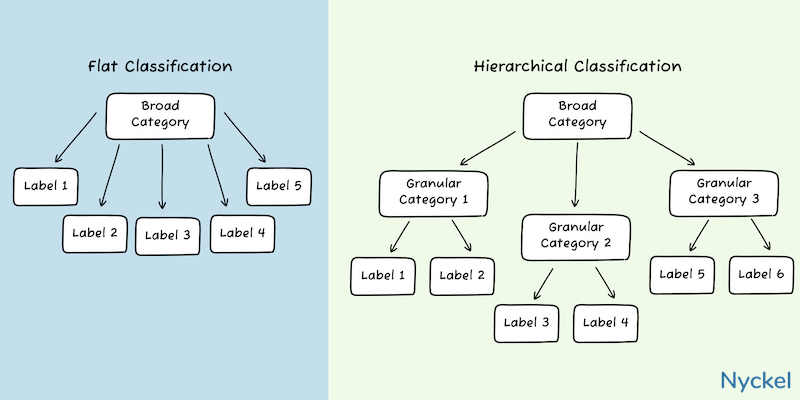

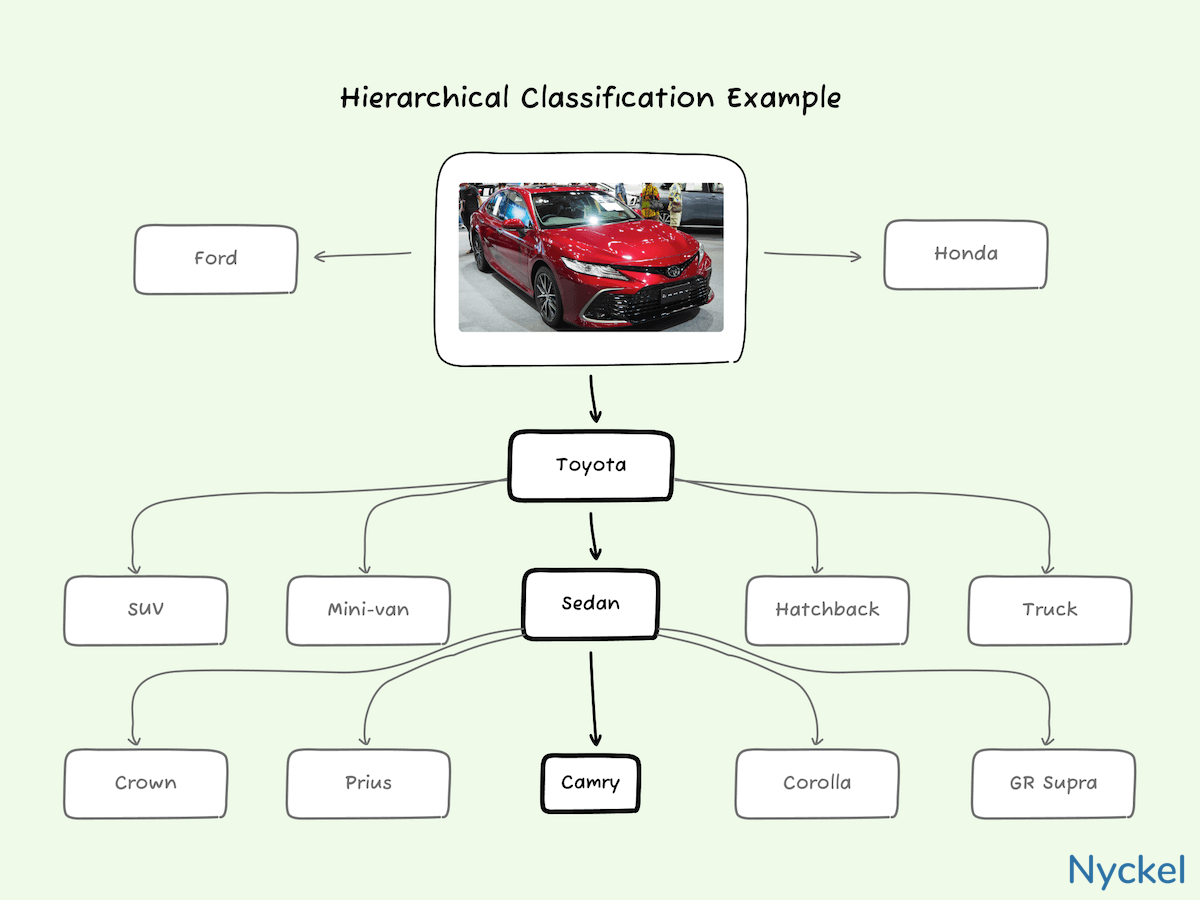

What is hierarchical vs. flat classification?

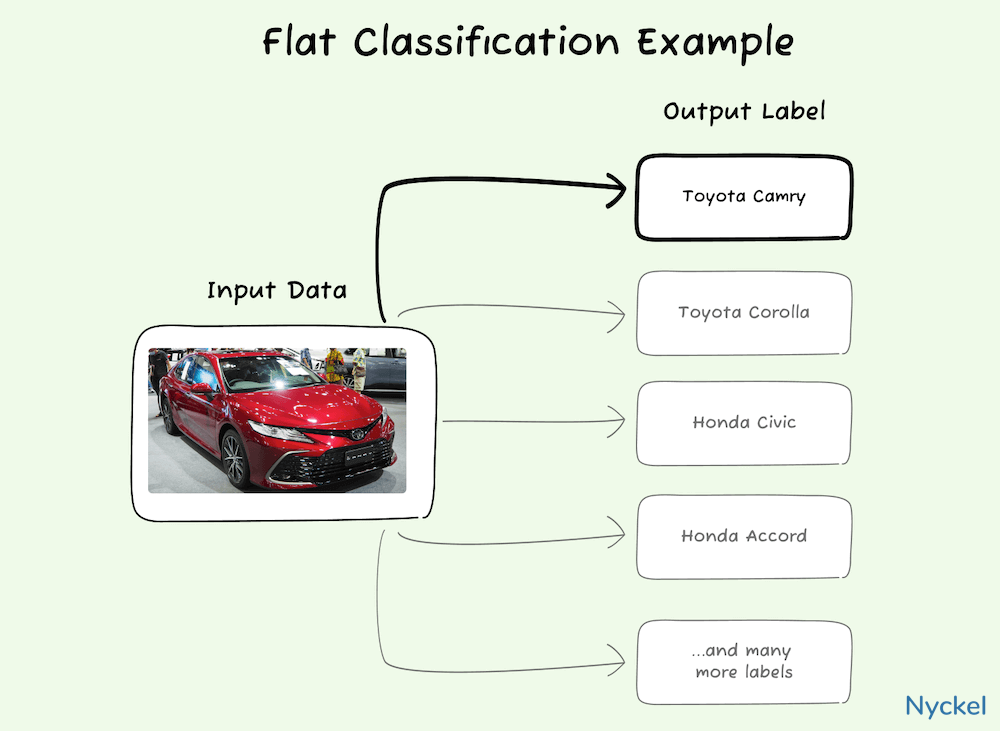

Hierarchical vs. flat classification refers to different ways to structure the classification algorithm. With flat, the model treats all categories independently, while hierarchical involves multi-level category branches, each with different cascading models.

A classifier to identify news article content, for instance, is a great candidate for hierarchical classification. Here, you could have two levels, with the first model looking for high-level category like Sports, Current News, Food, etc. Then, depending on which label it falls into, the text is re-run through a different branch-specific model. If the model predicted Sports, for example, the text would run through a new Sports-specific model, which may have classes like Football, Baseball, Soccer, etc. The final predicted label may be Sports (Football).

With flat classification the algorithm would instead look at all categories (Sports-Football, Sports-Soccer, Food-Recipes, Current News-US, etc.) before picking a winner.

To better illustrate this, let’s use the IAB (interactive advertising bureau) content taxonomy. It’s used by advertisers/publishers for contextual ad targeting. Their 3.0 taxonomy has 40 Tier 1 topic categories (Careers, Education, etc.), and those categories have subcategories, leading to 350 Tier 2 content categories. Your goal is to build a model that can identify articles about private schools.

-

With flat classification, the algorithm would have 350 different classes to choose from. It would ingest the content and look across all those labels to determine a winner.

-

With hierarchical classification, the algorithm would first look for the high-level category (40 classes). If it correctly identifies it as Education, it would rerun the text across just the 12 subcategories under Education (one of which is Private Schools).

Here are some considerations around which algorithm to use:

| Hierarchical | Flat | |

|---|---|---|

| Use when | You have a high number of classes that can also be structured into logical hierarchies | You don’t have many classes and/or classes can’t be organized into logical branches |

| Pros | It can be easier to troubleshoot, as you can identify at which level there are accuracy issues. If the hierarchies are well-defined, it will also likely be more accurate |

It’s simpler to set up, and for models without many classes, requires less computational work. It also involves less pre-labeling of training text (one level vs multiple) |

| Cons | More work to set-up and requires multi-level labeling of each training text | With many classes, identifying the holes in the model can be difficult |

| Use cases | IAB content taxonomy | Likely gender from a text |

What is supervised vs. unsupervised classification?

Supervised vs. unsupervised classification describes two approaches you can take around data labeling and category selection. Specifically, supervised models involve defining your own labels, while the model does it for you in unsupervised.

-

Supervised classification involves training a model on a prelabeled dataset using a predefined list of classes. So, you are picking the labels and telling the system what text falls under each. This classification method is the one this article assumes you’re using.

-

Unsupervised classification, on the other hand, involves uploading unlabeled training data and having the model come up with its own categories. It’s useful if you have a large dataset and want to discover hidden patterns.

Some use cases of unsupervised include:

-

Topic discovery: A common use case of unsupervised text classification is topic modeling and discovery. After uploading the dataset, the model will discover underlying themes that may have otherwise been missed.

-

Document clustering: You could group similar documents together without first having to manually label them. Think: receipts vs invoices vs personal letters.

-

Anomaly detection: You could also upload data and see if the model automatically detects anomalies like spam, fraud, or unusual patterns.

The downside is that since the categories are not predefined, they are often not as precise or meaningful as supervised classification.

Considerations for each include:

| Supervised | Unsupervised | |

|---|---|---|

| Use when | You care about specific classes | You want text grouped but don’t have specific categories in mind |

| Pros | You get to define the labels | It doesn’t require time-intensive labeling. Can also unearth hidden patterns |

| Cons | Labeling text can take time, and a highly accurate model can require a large dataset | You must use whatever categories the model chooses. Hard to measure accuracy |

| Use cases | Content moderation | You have thousands of long documents and want the model to cluster them as the model sees fit |

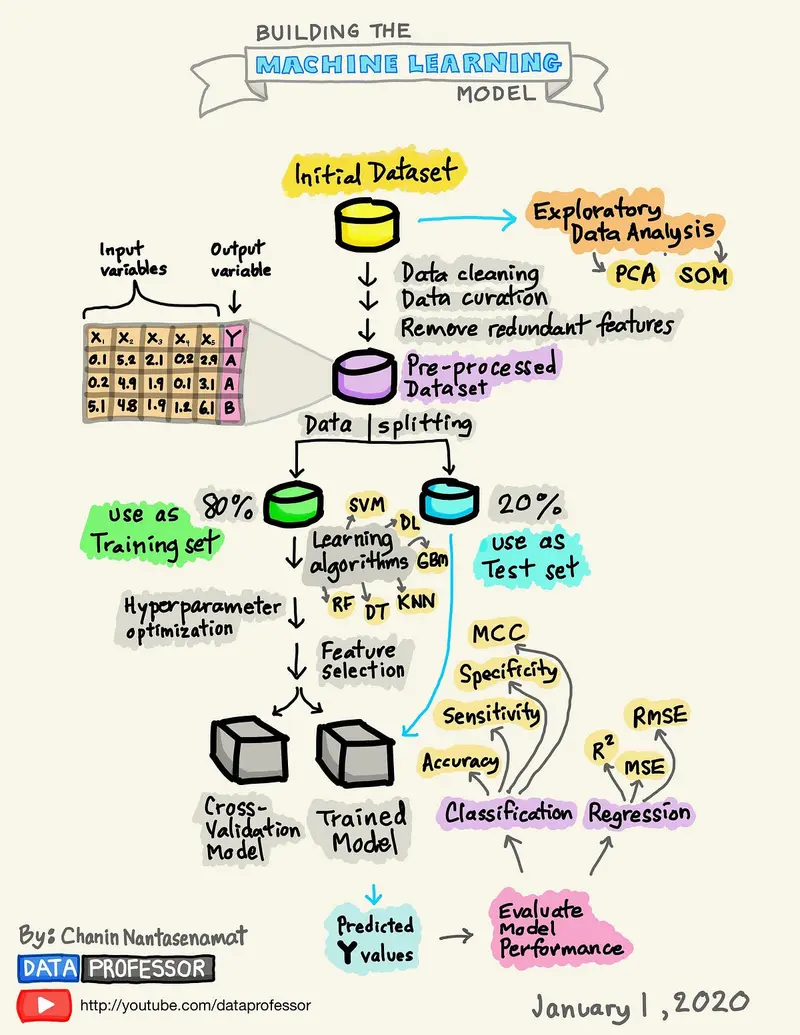

At a high level, how does building a classifier work?

The process includes:

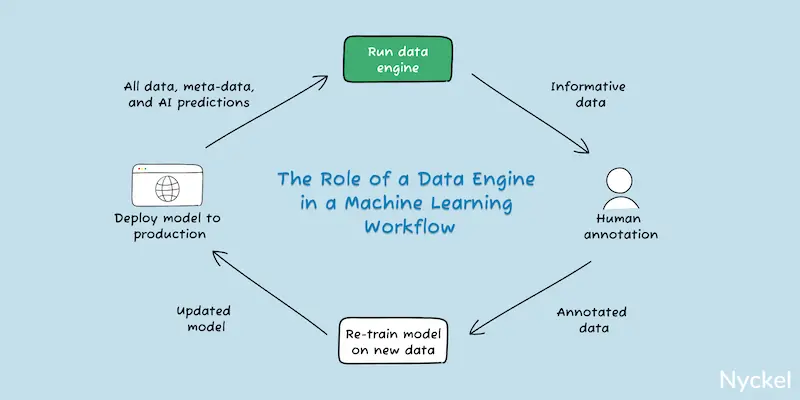

- Collecting training samples - To train a model, you need pre-labeled samples. You’ll also want to partition your content into three buckets: training, validation, and test.

- Picking a tool to build your model - Your main options are building from scratch using a machine learning framework like Tensorflow or integrating with an AutoML classification tool. We touch upon these methods later on.

- Training the model - You’ll upload the training content, wait for it to train, and then test it. Additional fine-tuning will likely be needed to hit an ideal accuracy.

- Integrating the model into your workflow - Once training is done, you’ll need a way to deploy the working model into your application. Most AutoML vendors provide this via APIs.

How do I find training samples?

A model is only as good as its training data. It’s important, therefore, to have a robust set of labeled data to train with. This is easier said than done, but below are some paths you can take:

- Use internal data - If you’re building a classifier with proprietary internal data, your text samples will likely come from your own database. To tag these, you can:

-Use current metadata - Businesses often have metadata already tied to database rows. If you do, you can parse this data into separate classes.

-Do it manually in-house - If you have untagged content, you could manually label them yourself.

-Outsource tagging - You could also outsource this manual annotation to a third-party.

-Use assisted learning tools - These solutions assist in labeling by suggesting potential classes based on textual analysis. Your team can then review, refine, and confirm these labels.

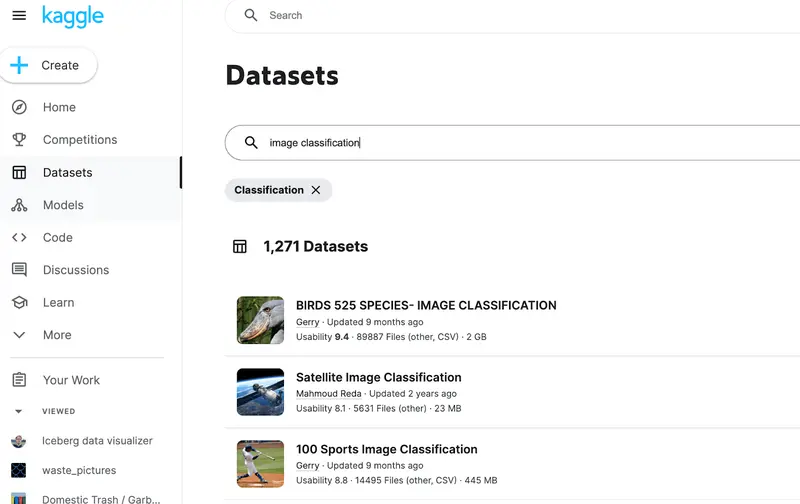

- Use existing datasets - There are many free downloadable datasets on sites like Kaggle, Roboflow, and Papers with Code. These are good options if you’re looking for generic data like offensive words or document types. More robust datasets can also be purchased from data suppliers.

How many training samples do I need?

There is no set rule for how many samples you need to build a working model. It could range from four to four million plus. But below are things to keep in mind:

-

Number of labels - The more labels you have, the more samples you’ll need.

-

Discreteness of labels - If the classes are quite distinct, you’ll need fewer samples as compared to classes that are more nuanced. For example, distinguing between Sports and Food content categories is easier than differentiating between Youth Sports and Pro Sports. With the latter, the model may need many more samples to reach high accuracy.

-

The tool you’re using - Some architectures are more data-efficient than others. Nyckel, for instance, lets you build a classifier with as few as 2 samples per label.

-

Your target accuracy - As a rule of thumb, adding more training data will improve the model. So, to hit 95% accuracy, you’ll need more samples than a 60% target.

That said, in Nyckel’s recent training data analysis, building a classifier with 80% accuracy took as few as 13 samples per class. Most of the classifiers then leveled off at 90% even with 100s of additional samples - indicating there’s quick diminishing returns as early as 10-15 samples per class.

This held true across industries and inputs (images and text), although more samples were generally needed for fine-grained classification.

How do I prepare my data for training?

There are a few strategies to ensure your text is ready to upload:

-

Mirror your end use case - Ensure your data is as similar to the actual use case as possible. If your social media app, for instance, limits post to 100 words, don’t train the model with 500-word posts.

-

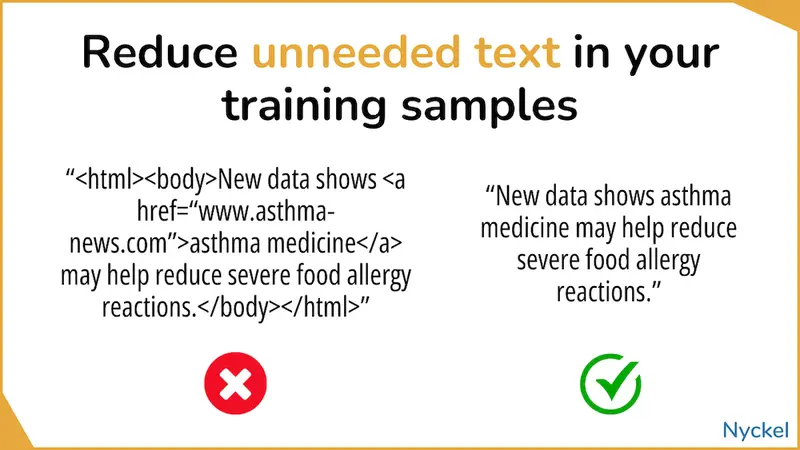

Reduce unneeded text - Your training samples should focus just on the text you want classified.

-

Balance the # of samples in each class - Each class should have roughly the same number of text samples. Otherwise, the model may be biased toward the larger one.

-

Audit your data - Finally, audit your text samples to ensure the labels are accurate and that nothing is missing or irrelevant.

What’s the difference between training, validation, and test samples?

Before training the model, it’s best practice to segment your text into three buckets: training, validation, and test.

- Training data - Annotated (labeled) data used to train the model.

- Validation data - Additional annotated data used to test and fine-tune the model during training. They help in identifying weaknesses in the algorithm, so you can improve it as needed. While they influence the training process, these samples are never used for training.

- Test data - Additional annotated data used only after the model is done. They provide an unbiased evaluation of the model’s performance, as they are never used for training or fine-tuning.

Both validation and test samples are important because testing a model with the same data that it was trained on can lead to overfitting, where the model works well for its training data but poorly for new text. But since these samples aren’t used for training, they are an independent snapshot of the model’s accuracy on new data.

You should set aside roughly 10% of samples for testing and 10% for validation. It does depend on your total samples too. The more samples you have, the lower your overall percentage needs to be.

How do I evaluate my classification model?

There are many ways to evaluate your classification model and determine if it’s ready for production.

Accuracy

Evaluating your model generally involves tracking its accuracy (the percent of times it tags the text correctly). Ways to measure this include:

- Accuracy against training dataset - Many classification tools will report on the model’s accuracy against the training dataset. Meaning, it will run the model against the already-uploaded training samples and tell you what % of predictions match the actual labels. While this provides a useful gut-check, you shouldn’t rely on this metric to assess accuracy.

- Accuracy on validation/test samples - As touched upon above, it’s good to set aside text samples for testing that are never used for training.

- Accuracy in practice - You can also deploy the model and then just track its performance on real data.

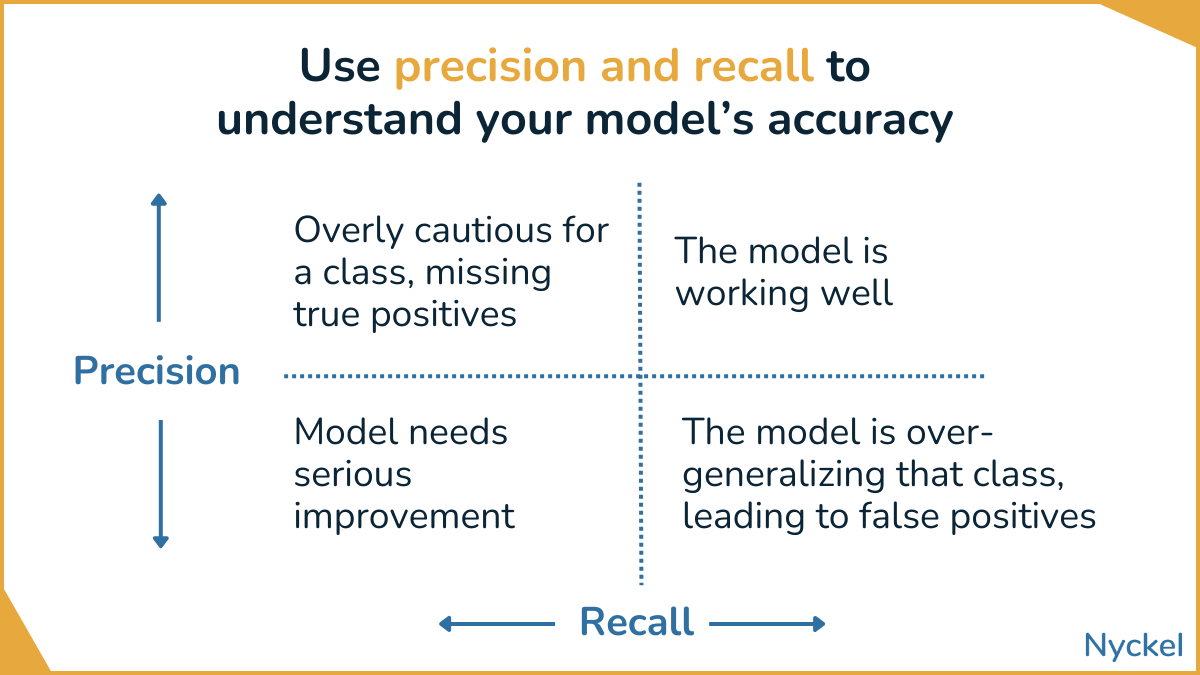

Precision and recall

Other ways to evaluate your model include precision and recall, which focus on specific classes.

| Accuracy | Recall | Precision | |

|---|---|---|---|

| What it measures | The % of times it selects the right label across all text instances | The % of times it selects the right label across text instances in a specific class | For a specific label, the % of times it predicted it correctly versus all times it picked it |

| Useful for understanding | The overall accuracy of the model | The accuracy within a certain class | How reliable the model is for a specific class |

To visualize this, let’s create a surprisingly inaccurate text classifier around, “Written in English or Not” containing 100 text samples.

| Actual Label | Total Samples | Predicted as English by Model | Predicted as Not English by Model |

|---|---|---|---|

| English | 40 | 30 (correct) | 10 (incorrect) |

| Not English | 60 | 20 (incorrect) | 40 (correct) |

- English Precision - Correctly-labeled English divided by total times the model picked English = 30 / 50 = 60%

- Not English Precision - Correctly-labeled Not English divided by total times the model picked Not English = 40 / 50 = 80%

- English Recall - Correctly-labeled English divided by total English instances = 30/40 = 75%

- Not English Recall - Correctly-labeled Not English divided by total Not English instances = 40/60 = 67%

These metrics can help you troubleshoot your model. Watch for classes with:

- Low recall, low precision - The model not only fails to get a given class correct, but it frequently assigns that label to the wrong class.

- Low recall, high precision - When the model predicts that class, it is generally correct, but it’s not selecting it as often as it should.

- High recall, low precision - The model is accurate within a certain class, but at the cost of incorrectly labeling too many other text instances (“false positives”). In other words, the model is picking that class often, whether or not it’s correct.

- High recall, high precision - The model is not only good at identifying a given label correctly, but rarely selects that class when it shouldn’t too. This is what you’re aiming for.

To fix instances of low recall, you’ll want to prioritize adding more training samples for that class. With high recall but low precision, you should review the class’s text/labels to ensure they capture just what you want.

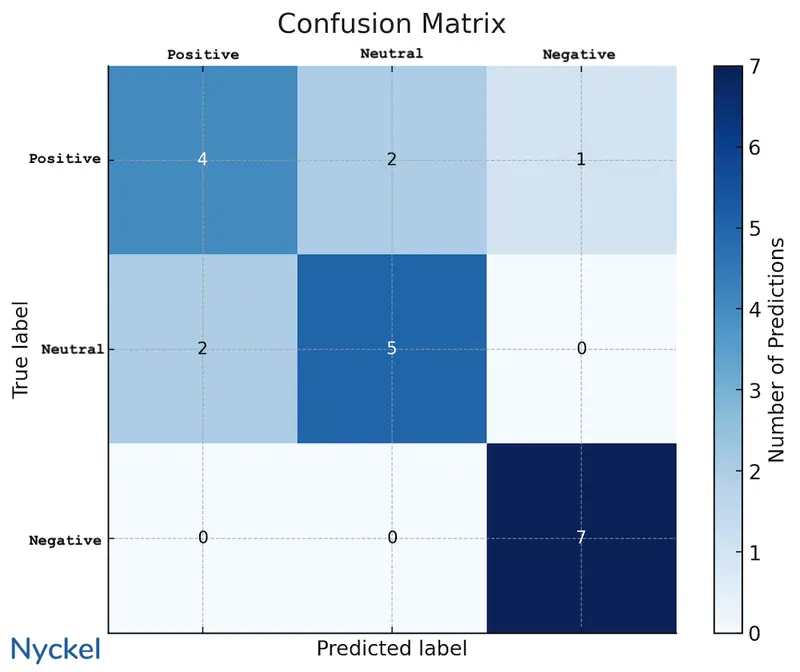

Confusion matrix

A confusion matrix is a table for easily understanding holes in your classification model. It tracks the relationship between actual label and predicted label.

(The English table above is a simple binary confusion matrix.)

Below is an example confusion matrix for a text sentiment classifier with three labels: Positive, Neutral, or Negative.

The matrix is helpful in quickly seeing that the model is good at correctly identifying negative sentiment, but it’s mediocre at differentiating between neutral and positive (of the 7 times the model predicted positive, 28% of the time it was actually neutral).

This is data that can help you troubleshoot and improve the model. In this instance, questions to ask are:

- Have I labeled the samples correctly? An incorrectly-labeled training sample can skew the model.

- Are the training samples for each text similar? Both would ideally be similar lengths / same language / same structure / etc.

- Do I have enough training samples? There’s only 7 samples for each, so an obvious first step is to add more training samples for these two classes.

What’s a good accuracy for a text classification model?

Your ideal accuracy will depend on your use case. If you are classifying legal documents for a trial, you may care about accuracy more than someone sorting their SMS text messages for fun.

It’s also good to understand what the human accuracy baseline is (aka, how accurate humans are in labeling the text). If humans are right 99% of the time, you should be aiming for 99%.

But in some cases, like classifying sentiment, a human may not be great - or there may not be agreement in nuanced situations (“I sorta liked it”) - so, in these cases, you may want to focus more on confidence levels than accuracy.

How do I launch my own text classifier?

Your main options for creating your own text classifier include:

- Build your own

- Use an AutoML classification vendor

- Use a pretrained classification API

Build your own

You can build your own classification model using a variety of tools. Theoretically you can build it entirely from scratch by building your own algorithm and connecting directly with a Convolutional Neural Network (CNN), Recurrent Neural Networks (RNNs), transformers, or a similar model. But most likely you’ll abstract some complexity by integrating with a ML framework like PyTorch or Keras and/or use a ML-Ops infrastructure tool like AWS SageMaker. These let you fully customize your model while simplifying more technical aspects.

Pros:

- Full control - You have complete control over the model, from the architecture to the training process.

- Cost-effective - Hosting the model yourself is generally cheaper than working with a vendor. That said, opportunity cost is a factor here if it’s time-consuming.

- Community support - These tools have lots of resources and documentation.

Cons:

- Time-intensive - It requires a significant time and effort commitment.

- Requires experience - This path is a non-starter if you don’t have extensive machine learning experience already.

- Limited UIs - Without these, it’s harder to visualize performance and improve the model.

- Hard to troubleshoot - When handling everything including data preprocessing, model design, training, and evaluation, it may be difficult to troubleshoot issues.

- Scalability - If you’re handling millions or billions of classifications, it could be hard to maintain uptime.

Using an AutoML classification platform

You can also launch a classifier with an AutoML machine learning tool. They abstract the work involved — such as algorithm design — which reduces time to deployment. They also generally include a UI for sample management and accuracy tracking.

Pros:

- User-friendly - UIs make it easy to track your progress, identify inaccuracies, and fine-tune as needed.

- Quick to deploy - You can deploy a model in less than a day (with some, in just minutes).

- Don’t need to be a ML expert - While the complexity will vary by tool, these solutions nonetheless aim to minimize the ML complexity.

- Scalable - The vendor, not you, will worry about scalability and uptime.

Cons:

- Cost - Can be expensive, especially for high-volume use cases.

- Less customization - You don’t have as much control over the underlying algorithm or how the model is structured.

- Data privacy - Any time you send data to a third-party, there are security risks.

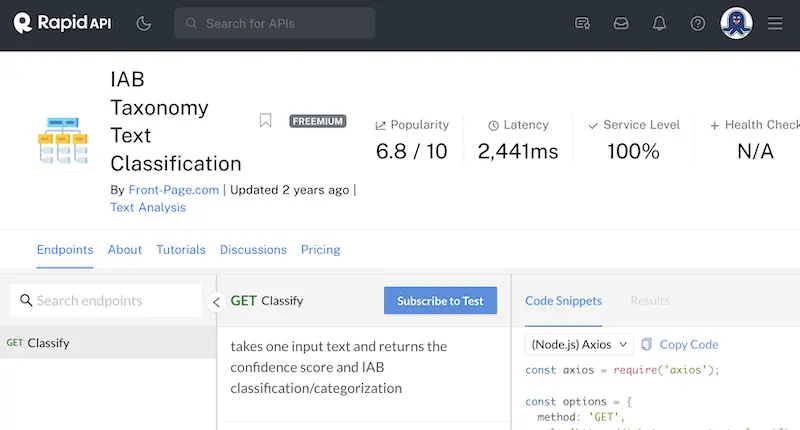

Use a pretrained classification API

Rather than building your own classification model (which requires training samples), you can also always hook into a pretrained classifier, like those offered on ChatGPT, Zyla, Rapid, and Nyckel. These generally operate as black boxes, where you ping the API with the text, and it’ll return the label and confidence score. While not customizable or ideal for bespoke use cases, they can be good for commoditized datasets, like offensive words.

Pros:

- Can integrate immediately - Can take just minutes to integrate.

- No machine learning knowledge needed - If you can code to an API, you’re good.

Cons:

- Limited to no fine-tuning - If these models don’t have the accuracy you want, you can’t improve their accuracy.

- Limited number available - On Zyla, for instance, there are just 20 text classification APIs available, many of which do the same type of analysis.

- Not for custom use cases - These are useful for certain situations — like generic emotion detection - but won’t work for proprietary data.

5 best text classification solutions

Below looks at the top 5 platforms for building your own text classification model.

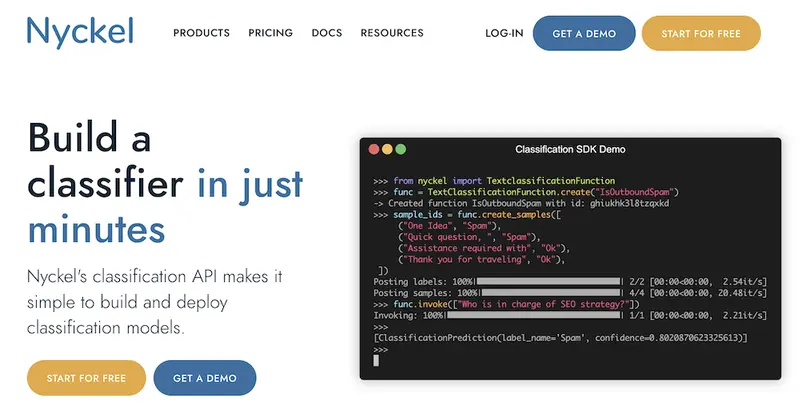

Nyckel

Nyckel provides a classification API that makes it easy to create and deploy text classification models in just minutes.

Pros:

- Real-time training - The model automatically updates after every change, making it easy to fine-tune and iterate.

- Elastic Pricing - You only pay for usage versus the more-confusing “always-on” node pricing that most tools use.

- API-approach makes it developer friendly - Many AutoML tools are not API-first, forcing customers to work through the UI. Nyckel has API / UI parity.

- Streaming API - Unlike other batch API tools, Nyckel’s API offers real-time creation of functions, uploading samples, and invoking.

Cons:

- Not as many bells and whistles as other solutions - Because Nyckel focuses on supervised classification, they don’t offer every feature, such as document clustering.

- No customization of model’s algorithm - The decision to abstract the machine learning complexity does mean there’s no way to adjust the foundational model algorithm.

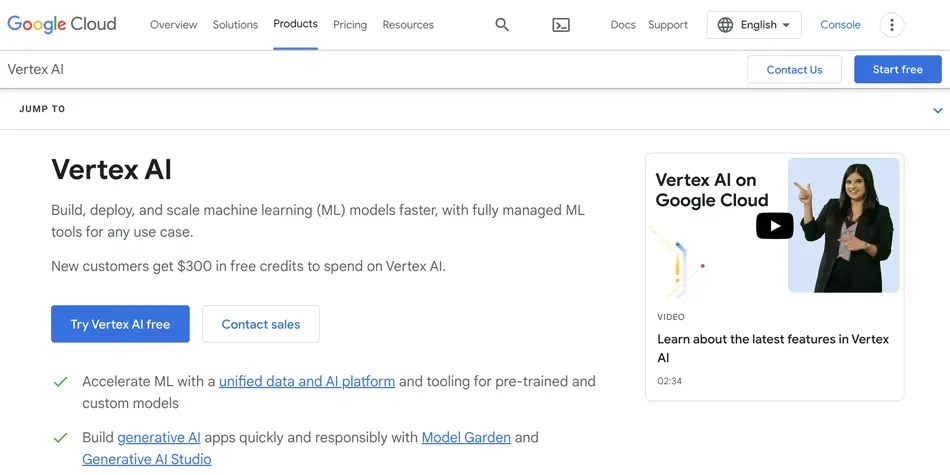

Google’s Vertex AI

Vertex AI is Google’s AutoML tool, enabling classification alongside other model types. While powerful, it comes with a heavy learning curve, and the initial setup can be complex. But its integration with the broader Google ecosystem can be advantageous for those willing to invest time in learning the tool.

Pros:

- Rich library of help docs - Google does a great job at creating explainer videos and guides if you get stuck. Additionally, there’s a broader Google community that actively shares ideas and recommendations.

- Backed by Google - Nobody got fired for buying from Google. It’s a safe route, even if not the easiest.

Cons:

- Learning curve - Unless you are already well-versed in Google’s developer products, the platform can be tough to navigate.

- Slow to train / build - Each time you want to train a custom dataset, even for a small dataset, it can take hours. Larger datasets can take 24 hours or more. This makes real-time fine-tuning impossible.

Amazon Comprehend

Amazon offers its own natural language processing solution called Comprehend. While the setup process can be tedious, the platform’s interface simplifies the training process. However, it does require coding for deployment, so it’s not ideal for those without coding expertise.

Pros:

- Great for ML experts - Amazon provides granular controls for manipulating your algorithm and models. If you know how to work them correctly, you get a lot of flexibility.

- Backed by Amazon - You can trust that Amazon will continually improve their product and offer extensive integration guides.

Cons:

- Arduous set-up - Unlike more turnkey AutoML vendors, Comprehend is confusing and time-consuming to set-up. Deployment often requires complicated coding versus a simple API call.

- ML experience is not a must, but it’s recommended - The flipside of a robust feature set is that managing them can be difficult unless you understand ML well.

Keras / Tensorflow / PyTorch

These three tools are all open-source machine learning frameworks. They make building your own machine learning model simple, but they don’t abstract it completely, so you still need a deep understanding of ML to launch.

Pros:

- Control - You can adjust nearly all parts of the model. Compared to the AutoML vendors on this list, you have much more control over the nuances of the algorithm.

- Cost-effective - Outside of opportunity cost, hosting your own model tends to be cheaper than a third-party vendor.

Cons:

- Requires ML experience - If you don’t have a machine learning background, it’ll be hard to get going.

- Time-consuming - Even if you have ML experience, there’s a major time and effort commitment.

Rapid / Zyla

These are two pretrained API marketplaces, and they provide access to some text classification models. They operate as black boxes, where you ping the API with the text, and it’ll return the predicted label.

Pros:

- Fast to launch - Can take just minutes to integrate.

- No ML experience needed - You must be able to code to an API, but that’s it.

Cons:

- No fine-tuning - If these APIs are inaccurate, you can’t improve their accuracy yourself.

- Limited number - On Zyla, for instance, there are just 16 text classification APIs available.

Text classification next steps

Text classification can be daunting, but third-party tools can reduce the complexity of launching your own model.

If you’d like to dip your toes into text classification, we recommend Nyckel. Launching your own classifier takes just minutes, and you can build them through the UI or the API.